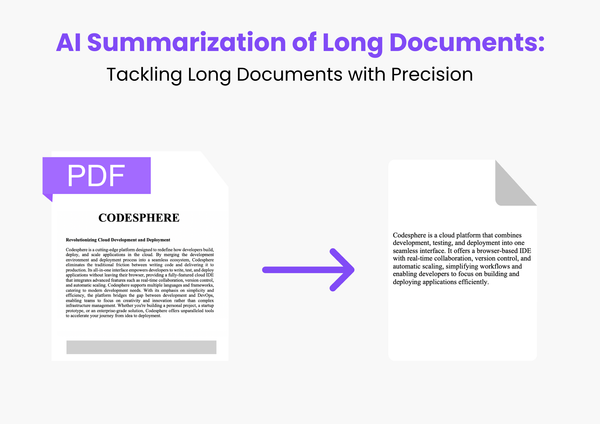

AI Summarization of Long Documents: Tackling Long Documents with Precision

Long texts are difficult to summarize in one pass, but a recursive approach can divide them into small parts and summarize those step by step. Done carefully, it preserves the meaning at each step of the iteration.

Information overload is a familiar problem in the AI world. Whether you are analyzing a 1,000-page legal document, extracting details from a research paper, or writing a short summary of a financial report, there is always demand for concise and precise summaries. AI tools have been a game changer for summarization, but they often struggle with very long documents.

Large PDFs, like 1000-page documents, pose a challenge for AI summarizers with strict size limits. Splitting them into smaller pieces seems logical, but it risks losing context. How can we overcome this limitation effectively? Explore this in depth in our article: Taking Advantage of the Long Context of Llama 3.1

In this blog post, we tackle this issue. We’ll cover:

- The difficulty of summarizing long documents.

- The recursive summarization process, which can summarize even the longest files.

- A complete step-by-step guide to setting up the summarization pipeline.

Whether you’re a developer, a researcher, or just someone curious about taming large text files, let’s dive in.

The Challenge: Why Long Document Summarization Is Hard

AI summarization tools are built for speed and accuracy, but they have some limitations:

- Token Limits: Most models, such as OpenAI's GPT-4 family, only accept a fixed number of tokens per request (about 128,000 for GPT-4 Turbo and GPT-4o; the original GPT-4 accepts 8,192). A long PDF can easily exceed that. Even inside the context window, accuracy tends to drop noticeably as the input approaches the limit. More on this here: https://codesphere.com/articles/taking-advantage-of-the-long-context-of-llama-3-1-2

- Context Loss: If we break the document apart and summarize it in small portions, the individual summaries can lose the thread and no longer fit together coherently.

- Customisability: Models are not one-size-fits-all; what works for a novel doesn't necessarily work well for a scientific paper.
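To make the token-limit problem concrete, here is a minimal sketch that estimates whether a text fits into a model's context window. It assumes the common rule of thumb of roughly four characters per token for English text; the function names, the 128,000-token default, and the page size are illustrative assumptions, and a real pipeline should count tokens with the model's own tokenizer.

```python
import math

# Assumption: ~4 characters per token is only a rough rule of thumb for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a text from its character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, context_limit: int = 128_000, reserved_for_output: int = 1_000) -> bool:
    """Check whether the text, plus room for the model's answer, fits the window."""
    return estimate_tokens(text) + reserved_for_output <= context_limit


# A 1,000-page document at ~3,000 characters per page (illustrative numbers).
document = "x" * (1_000 * 3_000)
print(estimate_tokens(document))  # 750000
print(fits_in_context(document))  # False
```

Under these assumptions, a 1,000-page document is several times larger than the window, which is why it has to be split before summarizing.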

This raises the question of how to summarize long documents without losing their meaning or context. Enter recursive summarization, a method that combines chunking, summarization, and iterative refinement.

This was exactly the challenge in one of our recent projects. We were given PDFs that could not be summarized in one go. The difficulty wasn't just splitting the text, but preserving meaning, coherence, and flow across each stage of the process. What emerged was a recursive summarization pipeline: a method that iteratively breaks down and processes content so that important details survive each round.

The Approach: Recursive Summarization

This involves chunking, recursive processing and intelligent summarization pipelines. Here’s how it works:

Step 1: Chunking the Text

The first step divides the document into chunks, so that we work with smaller portions instead of processing the complete document at once. Chunks can be:

- Character-based: Break the text into 1,000-character chunks.

- Semantic-based: Group by topics, paragraphs, or sections.
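As a rough illustration of the semantic-based option, the sketch below groups whole paragraphs into chunks instead of cutting at a fixed character count. The function name `split_by_paragraphs` and the blank-line paragraph delimiter are assumptions made for this example, not part of the original pipeline.

```python
def split_by_paragraphs(text: str, max_chars: int = 1000) -> list[str]:
    """Group whole paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars becomes its own oversized chunk;
    a fuller version would fall back to character-based splitting for it.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_length = 0
    for paragraph in paragraphs:
        # +2 accounts for the blank line used to join paragraphs
        added = len(paragraph) + (2 if current else 0)
        if current and current_length + added > max_chars:
            chunks.append("\n\n".join(current))
            current, current_length = [paragraph], len(paragraph)
        else:
            current.append(paragraph)
            current_length += added
    if current:
        chunks.append("\n\n".join(current))
    return chunks


sample = "Intro paragraph.\n\nSecond paragraph with details.\n\nClosing remarks."
for chunk in split_by_paragraphs(sample, max_chars=50):
    print(repr(chunk))
```

With `max_chars=50`, the first two paragraphs share a chunk and the third starts a new one, so no paragraph is cut in the middle.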

Step 2: Chunk Summarization

Each chunk passes through a summarization model. These models excel at condensing manageable amounts of text into concise summaries and can be customized with prompts like “focus on keeping reported numbers in the summary”.

The output is a summarized version of each chunk.
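One way to add such a custom instruction is to build the prompt from a few parts. This is a minimal sketch; the helper name `build_summary_prompt`, its parameters, and the prompt wording are hypothetical and should be tuned to your model and documents.

```python
from typing import Optional


def build_summary_prompt(chunk: str, focus: Optional[str] = None, max_sentences: int = 3) -> str:
    """Build a summarization prompt, optionally steering what the model keeps."""
    instructions = [f"Summarize the following text in at most {max_sentences} sentences."]
    if focus:
        instructions.append(f"Focus on {focus}.")
    return " ".join(instructions) + "\n\nText:\n" + chunk


prompt = build_summary_prompt(
    "Revenue rose 12% to $4.2M in Q3.",
    focus="keeping reported numbers in the summary",
)
print(prompt)
```

Keeping the focus instruction separate from the chunk text makes it easy to reuse the same pipeline for, say, legal and financial documents with different priorities.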

Step 3: Combining Summaries

Once a summary of each chunk has been generated, we aggregate them into a single summary. It is a bit like solving a jigsaw puzzle, where the summary of each chunk contributes a piece of the bigger picture.

However, just as with the original document, this combined summary may still be longer than what the model can accept due to token constraints. This is where recursive summarization comes in handy.

How it works:

- First Combination: Concatenate all the small summaries to form an initial, large summary.

- Token Check: Check whether this large summary exceeds the token limit of the AI model.

  - If it doesn't, we are done: this is our final summary.

  - If it does, we take another step.

- Recursive Summarization: As in the steps above, split the large summary into smaller chunks again.

- Summarize Again: Each of these smaller pieces is summarized separately, producing another batch of smaller summaries.

- Repeat Until Small Enough: Repeat this cycle of summarizing the summaries and checking the token limit until the output is small enough for the AI model to handle and we have our one final summary.
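The loop above can be sketched without any API calls by passing the summarizer in as a function. This is only an illustration under stated assumptions: it is written as a loop rather than a recursive call, it counts words instead of tokens, `fake_summarize` is a stand-in that simply truncates each chunk, and all names and defaults are hypothetical.

```python
from typing import Callable, List


def chunk_words(text: str, words_per_chunk: int) -> List[str]:
    """Split text into chunks of at most words_per_chunk words."""
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk]) for i in range(0, len(words), words_per_chunk)]


def recursive_summarize(
    text: str,
    summarize: Callable[[str], str],
    max_words: int = 500,
    words_per_chunk: int = 200,
    max_rounds: int = 10,
) -> str:
    """Summarize chunk by chunk until the combined summary fits max_words."""
    for _ in range(max_rounds):
        if len(text.split()) <= max_words:
            return text  # small enough: this is the final summary
        summaries = [summarize(chunk) for chunk in chunk_words(text, words_per_chunk)]
        text = " ".join(summaries)  # combine, then re-check on the next round
    raise RuntimeError("Summaries did not shrink below max_words; check the summarizer.")


def fake_summarize(chunk: str) -> str:
    """Stand-in for a model call: keeps only the first 20 words of the chunk."""
    return " ".join(chunk.split()[:20])


long_text = " ".join(f"word{i}" for i in range(10_000))
final = recursive_summarize(long_text, fake_summarize)
print(len(final.split()))  # 100
```

Here 10,000 words shrink to 1,000 after the first round and to 100 after the second, at which point the size check passes. The `max_rounds` guard is a design choice to avoid looping forever if a summarizer fails to shorten its input.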

Simplified Tutorial for Summarization

Step 1: Splitting Text into Chunks

First, create a function to divide text into manageable chunks based on length:

```python
def split_text_into_chunks(text, max_chars=500):
    """
    Splits text into chunks of at most max_chars characters, breaking on
    word boundaries. Characters are only a rough proxy for tokens.
    """
    chunks = []
    current_chunk = []
    current_length = 0

    for word in text.split():
        word_length = len(word) + 1  # Including space
        # Start a new chunk when adding this word would exceed the limit
        if current_chunk and current_length + word_length > max_chars:
            chunks.append(" ".join(current_chunk))
            current_chunk = [word]
            current_length = word_length
        else:
            current_chunk.append(word)
            current_length += word_length

    # Keep whatever is left over as the final chunk
    if current_chunk:
        chunks.append(" ".join(current_chunk))

    return chunks

# Example usage:
text = "Your very long document text goes here..."
chunks = split_text_into_chunks(text)
print(f"Generated {len(chunks)} chunks.")
```

Step 2: Summarize Each Chunk

Use OpenAI's GPT API to summarize each chunk. Here’s an example with minimal configuration:

```python
from openai import OpenAI

# Requires openai>=1.0 (older versions used openai.ChatCompletion.create instead).
# Alternatively, omit api_key and set the OPENAI_API_KEY environment variable.
client = OpenAI(api_key="your_openai_api_key")

def summarize_chunk(chunk):
    """
    Summarizes a given text chunk using GPT-4.
    """
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "user", "content": f"Summarize this: {chunk}"}
        ],
        max_tokens=100  # Caps the length of each summary
    )
    return response.choices[0].message.content

# Example usage:
summaries = [summarize_chunk(chunk) for chunk in chunks]
print("Summarized Chunks:", summaries)
```

Step 3: Recursively Refine the Summaries

Once all chunks are summarized, recursively combine them into a final summary.

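```python
def recursive_summarization(summaries, max_words=500):
    """
    Combines a list of summaries and re-summarizes them until the result
    is a single concise summary.
    """
    combined_text = " ".join(summaries)

    if len(combined_text.split()) < max_words:  # Final summary target length
        return combined_text

    # Still too long: split the combined text again, summarize each piece,
    # and recurse. Each pass must shrink the text, so keep the summaries
    # shorter than the chunks they are made from.
    new_chunks = split_text_into_chunks(combined_text)
    new_summaries = [summarize_chunk(chunk) for chunk in new_chunks]
    return recursive_summarization(new_summaries, max_words)

# Example usage:
final_summary = recursive_summarization(summaries)
print("Final Summary:", final_summary)
```

Step 4: Save and Review the Summary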

Finally, save the output summary to a file or display it directly.

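```python
# Write the final summary to disk (UTF-8 so non-ASCII characters survive)
with open("final_summary.txt", "w", encoding="utf-8") as file:
    file.write(final_summary)

print("Summary saved to 'final_summary.txt'")
```

The Trade-offs: Speed vs. Accuracy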

Our method is not the fastest; it’s a marathon, not a sprint. Recursive summarization is slow for huge documents because every round adds another batch of model calls, but the wait pays off:

- Accuracy: context-preserving, meaning-retaining summaries.

- Scalability: Flexible pipeline that can handle documents of any size.

- Customizability: User-adjustable chunk sizes according to different use cases.
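To get a feel for the speed side of this trade-off, the sketch below estimates how many model calls a run needs. It is an idealized back-of-the-envelope model, assuming every chunk is compressed to exactly `summary_words` words; the function name and all numbers are illustrative assumptions.

```python
import math


def estimate_model_calls(doc_words: int, words_per_chunk: int, summary_words: int, max_words: int) -> int:
    """Count summarization calls until the combined summary fits max_words.

    Assumes every chunk is compressed to exactly summary_words words, which is
    an idealization; real summaries vary in length.
    """
    calls = 0
    remaining = doc_words
    while remaining > max_words:
        chunks = math.ceil(remaining / words_per_chunk)
        calls += chunks  # one model call per chunk in this round
        shrunk = chunks * summary_words
        if shrunk >= remaining:
            raise ValueError("Summaries are not shrinking the text; use shorter summaries or larger chunks.")
        remaining = shrunk
    return calls


# Illustrative numbers: a 500,000-word document, 2,000-word chunks, 200-word summaries.
print(estimate_model_calls(500_000, 2_000, 200, max_words=2_000))  # 278
```

In this example the first round needs 250 calls, the second 25, and the third 3. Most of the cost sits in the first pass over the original document, so larger chunks reduce the call count at the price of longer inputs per call.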

Why Recursive Summarization Matters

This approach is a game-changer for:

- Legal professionals summarizing contracts.

- Academics distilling research papers.

- Content creators extracting insights from reports.

- Business professionals dealing with long reports, information overload or other types of long documents.

The Future of Summarization

While our recursive method is effective, there’s always room for innovation:

- Long-form Transformers: Models like Longformer and BigBird can handle longer inputs, reducing the need for recursive summarization.

- Domain Fine-tuning: Custom-trained models on specific datasets can improve accuracy even further.

Conclusion: The Art of Summarization

While summarizing lengthy documents is challenging, it's achievable with the right tools and techniques. Our approach to recursive summarization will not win every time, especially when speed is the priority, but it is a solid starting point. So, take it one chunk at a time, experiment, and refine.